
A collection script that scrapes vendor names from Butian (补天) and searches Baidu for each vendor's main domain

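刀网 has been up for two days now and I don't have much else to post, so here's a crawler script.

To use it, just replace the cookie in the script with the one your browser sends when you are logged in to the Butian site.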
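If you would rather not paste the cookie into the source every time, one option is to read it from an environment variable. This is a minimal sketch under assumptions: the variable name `BUTIAN_COOKIE` and the helper `butian_headers` are hypothetical, and the other header values are copied from the script's `bananer` method.

```python
import os


def butian_headers(cookie=None):
    """Build request headers for loudong.360.cn.

    BUTIAN_COOKIE is a hypothetical variable name used only in this sketch;
    set it to the Cookie value from your logged-in browser session.
    """
    if cookie is None:
        cookie = os.environ.get("BUTIAN_COOKIE", "")
    if not cookie:
        raise ValueError("no Butian cookie supplied")
    # Header values below are taken from the original script
    return {
        "Cookie": cookie,
        "Referer": "http://loudong.360.cn/Service",
        "Origin": "http://loudong.360.cn",
        "X-Requested-With": "XMLHttpRequest",
        "Accept": "application/json, text/javascript, */*; q=0.01",
    }
```

With the variable exported in your shell, `requests.post(url, headers=butian_headers(), data=...)` picks the cookie up without any change to the code.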

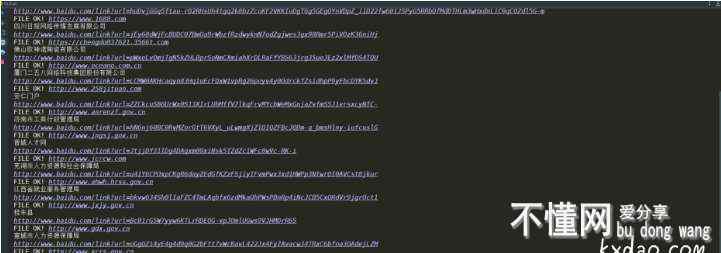

And here is a screenshot of the code running.

```python
# coding=utf-8
# author iod iod

import requests, re, json


class butian(object):

    def __init__(self, page):
        self.page = page
        self.butian_url = "http://loudong.360.cn/Reward/pub"
        # self.proxies = {"http": "113.214.13.1:8000"}
        # Form fields for the vendor-list request; "p" is the page number
        self.data = {
            "s": 1,
            "p": self.page,
            "token": ""
        }

    def bananer(self):
        # Request headers; put the Cookie from your own Butian session here.
        # Content-Length is left out because requests computes it from the body.
        self.header = {
            "Cookie": "",  # COOKIE
            "Host": "loudong.360.cn",
            "Referer": "http://loudong.360.cn/Service",
            "User-Agent": "Mozilla/5.0 (Linux; U; Android 5.1; zh-cn; m1 metal Build/LMY47I) AppleWebKit/537.36 (KHTML, like Gecko)Version/4.0 Chrome/37.0.0.0 MQQBrowser/7.6 Mobile Safari/537.36",
            "Origin": "http://loudong.360.cn",
            "Accept": "application/json, text/javascript, */*; q=0.01",
            "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",
            "X-Requested-With": "XMLHttpRequest",
            "Accept-Encoding": "gzip, deflate",
            "Connection": "keep-alive",
            "Accept-Language": "zh-CN,zh;q=0.8"
        }
        return self.header

    def butianjson(self):
        self.res = requests.post(self.butian_url, headers=self.bananer(), data=self.data)
        print(self.res.content)
        self.content = json.loads(self.res.content)
        result = []
        # Collect company_name from every vendor entry in data.list
        for item in self.content["data"]["list"]:
            result.append(item["company_name"])
        return result


class baidu(object):

    def __init__(self):
        self.url = "https://www.baidu.com/s?ie=utf-8
```
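The post is cut off partway through the `baidu` class, so its search logic is not shown. The following is only a sketch of how that second step might work, not the author's code. It assumes Baidu's `wd` query parameter for the search term (`ie=utf-8` comes from the original URL) and pulls hostnames out of a results page with a regular expression. The helper names, the `exclude` default, and the "registrable domain" rule are assumptions; the rule uses a small hard-coded suffix set rather than the full Public Suffix List.

```python
import re
from urllib.parse import urlencode

BAIDU_SEARCH = "https://www.baidu.com/s"

# Small, incomplete set of two-level suffixes (assumption); a real tool
# should consult the Public Suffix List instead.
TWO_LEVEL_SUFFIXES = {"com.cn", "net.cn", "org.cn", "gov.cn", "edu.cn"}

# Hostnames such as www.example.com; re.ASCII makes \b treat Chinese
# characters as non-word, so hosts glued to Chinese text still match.
HOST_RE = re.compile(r"\b((?:[a-z0-9-]+\.)+[a-z]{2,})\b", re.IGNORECASE | re.ASCII)


def search_url(company_name):
    """Return a Baidu search URL for one vendor name (wd = query string)."""
    return BAIDU_SEARCH + "?" + urlencode({"ie": "utf-8", "wd": company_name})


def main_domain(host):
    """Reduce a hostname to its registrable domain (naive heuristic)."""
    labels = host.lower().rstrip(".").split(".")
    if len(labels) >= 3 and ".".join(labels[-2:]) in TWO_LEVEL_SUFFIXES:
        return ".".join(labels[-3:])
    return ".".join(labels[-2:])


def candidate_domains(result_text, exclude=("baidu.com",)):
    """Collect distinct main domains mentioned in a search-result page."""
    found = []
    for host in HOST_RE.findall(result_text):
        domain = main_domain(host)
        if domain not in exclude and domain not in found:
            found.append(domain)
    return found
```

Each name returned by `butian(page).butianjson()` would be passed to `search_url`, the page fetched with `requests.get`, and `candidate_domains(response.text)` checked by hand, since the regex also picks up unrelated hosts and file names that happen to look like domains.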